
Primary research interests:

- Mathematical Foundations of Deep Learning. I develop a rigorous, theorem-driven understanding of neural networks using tools from geometry, topology and dynamical systems. My work analyzes how structural features of neural network parameterizations shape the behavior, expressivity, and training dynamics of the functions they represent. Three complementary themes motivate this research:

- ReLU neural networks and piecewise-linear geometry. Fully-connected multilayer perceptrons with ReLU activations coincide with the class of piecewise linear functions and are the most "vanilla" type of neural network. I study these networks through the geometry of their associated polyhedral complexes, analyzing how the arrangement of linear regions is determined by the network's architecture and parameters.

- Parameter space symmetries and functional redundancy. Modern deep learning models are highly redundant, meaning that many distinct parameter settings yield the same realized function. I analyze the fibers, symmetries, and quotient geometry of the non-injective realization map from parameter space to function space, and study how these structures influence training dynamics, implicit bias, generalization, and model selection.

- Topological expressivity. We do not have a good understanding of which functions can be realized by which neural network architectures. I investigate how the architecture constrains the topological properties of the functions a network can represent.

Prior to transitioning to the mathematics of deep learning, I worked in dynamical systems. Although the mathematical objects I study have shifted, my approach remains the same: in both areas, I combine geometric and dynamical perspectives with precise mathematical reasoning to illuminate deep structural phenomena in parameterized families of functions.

- Dynamical Systems. My work on dynamical systems explores topological entropy and Thurston sets in real and complex one-dimensional dynamics, with an emphasis on how combinatorial models of interval maps and polynomials reflect and encode dynamical complexity (kneading theory). I have also worked in holomorphic dynamics and billiards.

Research advisees:

Current:

- Yaoying Fu, Ph.D. student

Past:

- Laura Seaberg, Ph.D., 2025

- Ethan Farber, Ph.D., 2023

- Henry Bayly, senior thesis, 2022

- Alex Benanti, senior thesis, 2022

- Jieqi Di, scholar of the college thesis (2nd reader), 2022

- Hong Cai, geophysics MS student (2nd reader), 2022

Publications:

- Regularization Implies Balancedness in the Deep Linear Network (with G. Menon)

- Link to arxiv preprint

- Empirical NTK tracks task complexity (with E. Grigsby).

- On Functional dimension and persistent pseudodimension (with E. Grigsby)

- Link to arxiv preprint.

- Hidden symmetries of ReLU neural networks (with E. Grigsby, D. Rolnick)

- Proceedings of the 40th International Conference on Machine Learning, PMLR 202:11734-11760

- Link to arxiv preprint and published version.

- On the deck groups of iterates of bicritical rational maps (with S. Koch, T. Sharland)

- Link to arxiv preprint.

- Bicritical rational maps with a common iterate (with S. Koch, T. Sharland)

- International Math. Research Notices, Mar. 2023.

- Link to arxiv preprint.

- Functional dimension and moduli spaces of ReLU neural networks (with E. Grigsby, R. Meyerhoff, C. Wu)

- Link to arxiv preprint.

- Advances in Mathematics, Vol 482, Part C, Dec. 2025

- Local and global topological complexity measures of generic, transversal ReLU neural network functions (with E. Grigsby, M. Masden).

- Link to arxiv preprint.

- Existence of maximum likelihood estimates in exponential random graph models (with H. Bayly, A. Khanna).

- Link to arxiv preprint.

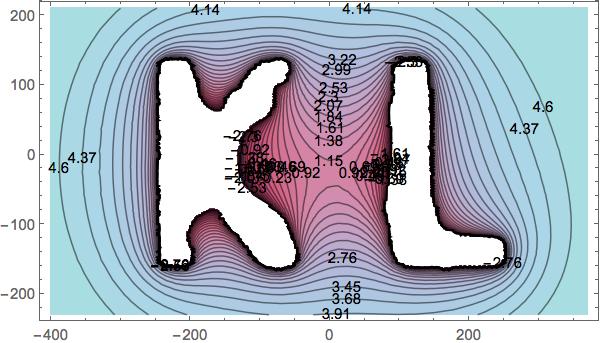

- Master Teapots and entropy algorithms for the Mandelbrot set (with G. Tiozzo, C. Wu).

- Transactions of the American Math. Soc., Mar. 2025

- Link to arxiv preprint.

- On transversality of bent hyperplane arrangements and the topological expressiveness of ReLU neural networks (with E. Grigsby)

- SIAM Journal on Applied Algebra and Geometry, vol. 6, issue 2

- Links to published version and arxiv preprint.

- A characterization of Thurston's Master Teapot (with C. Wu).

- Ergodic Theory & Dynamical Systems, Nov. 2022.

- Links to arxiv preprint.

- Degree-d-invariant laminations (with W. Thurston, H. Baik, Gao Yan, J. Hubbard, Tan Lei, D. Thurston).

- What's Next?: The Mathematical Legacy of William Thurston, Princeton Univ. Press, 2021.

- Links to published version and arxiv preprint.

- The Shape of Thurston's Master Teapot (with H. Bray, D. Davis, C. Wu).

- Advances in Mathematics, vol 377, Jan 2021.

- Links to arxiv preprint.

- Fekete polynomials and shapes of Julia sets (with M. Younsi).

- Transactions of the American Math. Soc. v. 371, 2019

- Link to arxiv preprint.

- Convex shapes and harmonic caps (with L. DeMarco).

- Arnold Math. Journal, (2017) 1-21.

- Link to arxiv preprint.

- Read about this in Quanta Magazine.

- Horocycle flow orbits and lattice surface characterizations (with J. Chaika).

- Ergodic Theory & Dynamical Systems, vol 39 (6), 2019, p. 1441-1461.

- Links to published version and arxiv preprint.

- Counting invariant components of hyperelliptic translation surfaces.

- Israel J. Math., 210 (2015), p. 125-146.

- Link to arxiv preprint.

- Shapes of polynomial Julia sets.

- Ergodic Theory & Dynamical Systems, vol 35, 06, 2015.

- Links to arxiv preprint.

- Read about this result in Scientific American.

- A Game of Life on Penrose tilings (with D. Bailey).

- Permanent preprint. Link to arxiv preprint.

- Flat surface models of ergodic systems (with R. Trevino).

- Discrete and Continuous Dynamical Systems - A, vol 36, 10 (2016), 5509-5553.

- Links to published version and arxiv preprint.

- Measurable Sensitivity (with J. James, T. Koberda, C. Silva, P. Speh).

- Proc. Amer. Math. Soc. 136 (2008), 3549-3559.

- Links to published version and arxiv preprint.

- On ergodic transformations that are both weakly mixing and uniformly rigid (with J. James, T. Koberda, C. Silva, P. Speh).

- New York Journal of Math. 15 (2009), 393-403.

- Links to published version and arxiv preprint.

- Families of dynamical systems associated to translation surfaces.

- Ph.D. dissertation, Cornell University, 2014.

- Descriptive dynamics of Borel endomorphisms and group actions.

- Honors thesis in mathematics, Williams College, 2007.

Grants and Fellowships:

- NSF Award #2133822: Collaborative Research, Probabilistic, Geometric and Topological Analysis of Neural Networks, from Theory to Applications, 2022

- NSF Award #1901247: Shapes of Julia sets, Thurston sets, and Neural Networks, 2019

- "Women in STEM," Major Grant, Institute for Liberal Arts, Boston College, 2018

- Research Incentive Grant, Boston College, 2018

- NSF Award #1401133: NSF Mathematical Sciences Postdoctoral Research Fellowship, 2014

- NSF Graduate Research Fellowship, 2009

- DoD National Defense Science and Engineering Graduate Fellowship, 2009

- U.S. State Dept. Critical Languages Scholarship (Chinese), 2008

- Cornell University Graduate Fellowship, 2007